Sign up with Facebook or Sign up manually

Already have an account? Log in here.

Nathan Landman, Hannah Pang, Christopher Williams, and

- Eli Ross

contributed

K-means clustering is a traditional, simple machine learning algorithm that is trained on a test data set and then able to classify a new data set using a prime, \(k\) number of clusters defined a priori.

Data mining can produce incredible visuals and results. Here, k-means algorithm was used to assign items to 1000 clusters, each represented by a color [1] .

Data mining can produce incredible visuals and results. Here, k-means algorithm was used to assign items to 1000 clusters, each represented by a color [1] .

An objective of machine learning is to derive techniques for unsupervised learning on data. This kind of data analysis is very helpful in many applications that require classification of data, such as identifying cancerous cells within a large sample, clustering words with similar definitions for better search engine accuracy, identifying outliers in student’s academic performance for better refinement of habits, or even for detecting landmines in a battlefield [2].

Building a Baseball Team Using Classification

Imagine a high school baseball coach wants to use data analysis to predict whether potential new recruits will be spectacular, mediocre, or dismal.

He has data on the players currently on his team: position, years of experience, batting average, on-base percentage, and number of stolen bases per games. He also has some data on the players he is considering recruiting. How might he go about selecting a new player that fills any gaps of skill currently on his team?

Contents

- K-means algorithm

- Technical Analysis

- Complexity

- Example

- When to Use K-Means Clustering

- References

K-means algorithm

This clustering algorithm separates data into the best suited group based on the information the algorithm already has. Data is separated in \(k\) different clusters, which are usually chosen to be far enough apart from each other spatially, in Eucledian Distance, to be able to produce effective data mining results. Each cluster has a center, called the centroid, and a data point is clustered into a certain cluster based on how close the features are to the centroid.

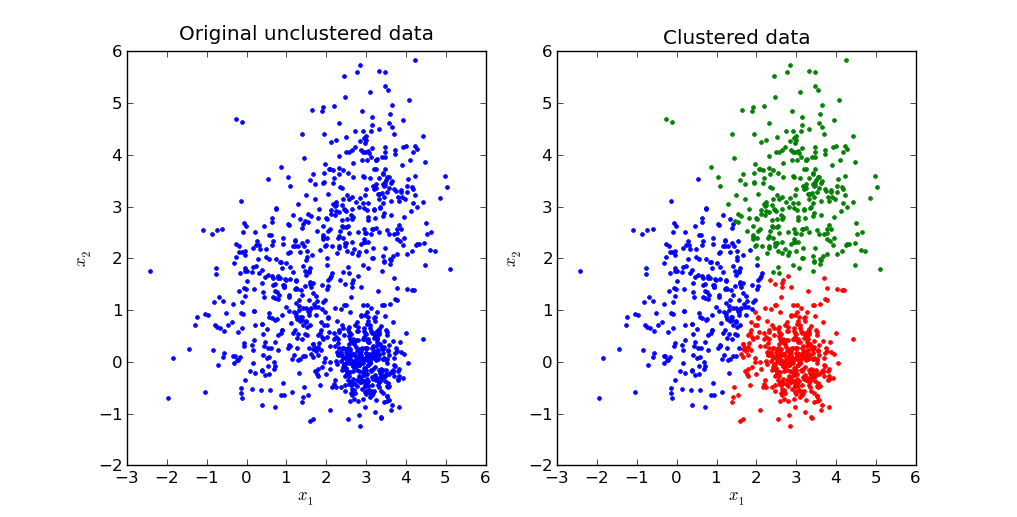

A computer-generated program showing k-means clustering [3]

A computer-generated program showing k-means clustering [3]

K-means algorithm iteratively minimizes the distances between every data point and its centroid in order to find the most optimal solution for all the data points.

- \(k\) random points of the data set are chosen to be centroids.

- Distances between every data point and the \(k\) centroids are calculated and stored.

- Based on distance calculates, each point is assigned to the nearest cluster

- New cluster centroid positions are updated: similar to finding a mean in the point locations

- If the centroid locations changed, the process repeats from step 2, until the calculated new center stays the same, which signals that the clusters' members and centroids are now set.

Finding the minimal distances between all the points implies that data points have been separated in order to form the most compact clusters possible, with the least variance within them. In other words, no other iteration could have a lower average distance between the centroids and the data points found within them.

A graphical look at k-means clustering [4]

Technical Analysis

The K-means algorithm defined above aims at minimizing an objective function, which in this case is the squared error function.

The objective function for the K-means clustering algorithm is the squared error function:

\(J = \sum_{i=1}^{k} \sum_{j=1}^{n} (||x_ i – v_j||)^2 = 1 \)

where,

\(||x_i – v_j|| \) is the Eucledian distance between a point, \(x_i\), and a centroid, \(v_j\), iterated over all k points in the \(i^{th}\) cluster, for all n clusters.

[5]

In simpler terms, the objective function attempts to pick centroids that minimize the distance to all points belonging to its respective cluster so that the centroids are more symbolic of the surrounding cluster of data points.

Pseudocode

1 2 3 4 5 6 7 8 91011121314151617181920212223242526272829 | |

Why use the squared error function rather than, say, the absolute error? The reason is because the squared error has nicer mathematical properties than absolute error. For further reference on these properties, check out this blog post by Ben Khun.

Complexity

Although the algorithm seems quite simple, finding the optimal solution to the problem for observations in either d dimensions or for k clusters is NP-Hard. However, if k and d are fixed, the problem can be solved in time \(O(n^{dk+1}log(n))\) using Lloyd’s Algorithm, a common k-clustering algorithm, where n is the number of entities to be clustered.[6]. However, the running time for this algorithm on nicely clustered data can be quite small, as minimization of the objective function will occur quickly.

Because the algorithm computes the distances between each of the k cluster centers and their respective data points every single iteration, a few iterations are enough to make further adjustments not worth the time complexity trade-off. In other words, because further iterations won’t change the assignments of the majority of data points but their distances still need to be calculated, the algorithm becomes inefficient if convergence is the goal. For this reason, several variations of Lloyd’s k-means clustering algorithm have been developed in order to speed up the process at later stages; where these variations include using the triangle-inequality, amongst others.

Example

Before applying k-means clustering to a data set, data has to go from characteristics of an object to numerical data that can be analyzed.

Categorizing Baseball Players

How would the baseball coach use k-means clustering to predict whether new recruits will be good? Each trait of a player can be represented as a feature vector. By converting characteristics into numbers in a feature vector, the players become comparable in a vector space so that their differences can be better quantified.

The coach has the same type of information on both current players and new potential ones. Using k-means clustering on the entire set of data points, he can figure out which of his current, known-level (remarkable, mediocre, dismal) players are closest to the new ones, and which of the new players would fill the most voids within his team.

When to Use K-Means Clustering

K-Means clustering is a fast, robust, and simple algorithm that gives reliable results when data sets are distinct or well separated from each other in a linear fashion. It is best used when the number of cluster centers, is specified due to a well-defined list of types shown in the data. However, it is important to keep in mind that K-Means clustering may not perform well if it contains heavily overlapping data, if the Euclidean distance does not measure the underlying factors well, or if the data is noisy or full of outliers [7].

References

- Brickley, D. 1k_overview. Retrieved from https://www.flickr.com/photos/danbri/6233990550/in/photolist-auSQcG-87Yxj7-EmVCps-ptEDsu-7m1WFX-5EFMse-i4x1v-egRfqk-81wUmZ-a4D73j-87Yxiy-tty5TD-bAhHzE-5tMAuK-7MfnED-7rbggn-7rfbmm-rq7j4f-a1hTYE-gxCMpH-57XUAW-a6Nx34-8hAC1D-ounzPd-dybf7L-fCTKB3-dybeZd-dy5Mut-dybfdb-ow6s3X-wcCF9s-cqjRDS-rrXXND-dybfjm-fCBeqB-dy5MGx-fCTM13-nouGAk-71tzae-4xwxcC-k2tddi-8kfyKH-8hABZB-7y4a3Y-ou3Zst-ftDTEu-osZ261-oun8p5-cSNeMy-4eJiRW

- Naik, A. Clustering Algorithm Applications. Retrieved from https://sites.google.com/site/dataclusteringalgorithms/clustering-algorithm-applications

- Petersen, J. K-means. Retrieved from http://pypr.sourceforge.net/kmeans.html#k-means-example

- None, N. k-means clustering. Retrieved May 02, 2016, from https://en.wikipedia.org/wiki/K-means_clustering

- Matteucci, M. K-Means Clustering. Retrieved June 14, 2016, from http://home.deib.polimi.it/matteucc/Clustering/tutorial_html/kmeans.html

- Inaba, M. (1994). Applications of weighted Voronoi diagrams and randomization to variance-based k-clustering. ACM Symposium on Computational Geometry, 10th, 332-339.

- Naik, a. k-Means Clustering Algorithm. Retrieved June 14,2016, from https://sites.google.com/site/dataclusteringalgorithms/k-means-clustering-algorithm

Cite as: k-Means Clustering. Brilliant.org. Retrieved from https://brilliant.org/wiki/k-means-clustering/